May the Prompt Be With You

Modern human-to-human communication emphasizes text over speech and brevity over clarity. Ironically, can chatting with a computer help us with our interpersonal communication?

“The report isn’t impactful. Can you punch it up?”

“That’s interesting”

“Can you run the meeting?”

“I need this asap”

My phone buzzes and 99% of the time, it is either one of my two sons (whose calls are allowed to break through the Do Not Disturb) or a spammer. No one else calls anymore. Well, maybe my hair salon to confirm an appointment if I ignored the text. The same goes for work. We text using Slack or Teams, and in lieu of a phone call, we jump on a Zoom.

The reliance on text makes me nuts. Whether at home or at work, texts are cryptic and imprecise, and I tend to read too much into them. When a text starts out with “Diane, I am wondering …” I fixate on why they used my name. Are they mad at me? (My husband does not have the same negative reaction to seeing his name in a text. I’m sure that means something.)

“I need this ASAP” - does that mean drop everything, send tomorrow, or get to it whenever it is truly realistically possible?

These vagaries are what the academics call underdetermined linguistic representations (ULR). And they create stress, writes Anna Drożdżowicz in her paper (Synthese, May 2022). There is a tremendous amount of “decoding” that goes on to try and figure out what the message is.

How much energy have you spent looking at a text and wondering about the tone, the intent, the meaning?

And indeed studies like this and this show that texting impacts communications. We miss nonverbal cues: faces that say, what the hell is she even talking about? Or the raised eyebrows that say, tell me more. They answer with an “oh, ok,” and what does that mean? Is it passive-aggressive code for “I hate it,” or an honest “I never thought of that, it sounds like a great idea”?

We also shorthand our texts, getting the thought out in as few characters as possible. We mix acronyms and idioms: ngl thatz sus.[1] It is brevity over clarity, unstructured over structured, tone without voice, targeted yet impersonal. The receiver has to work so hard to decode the message.

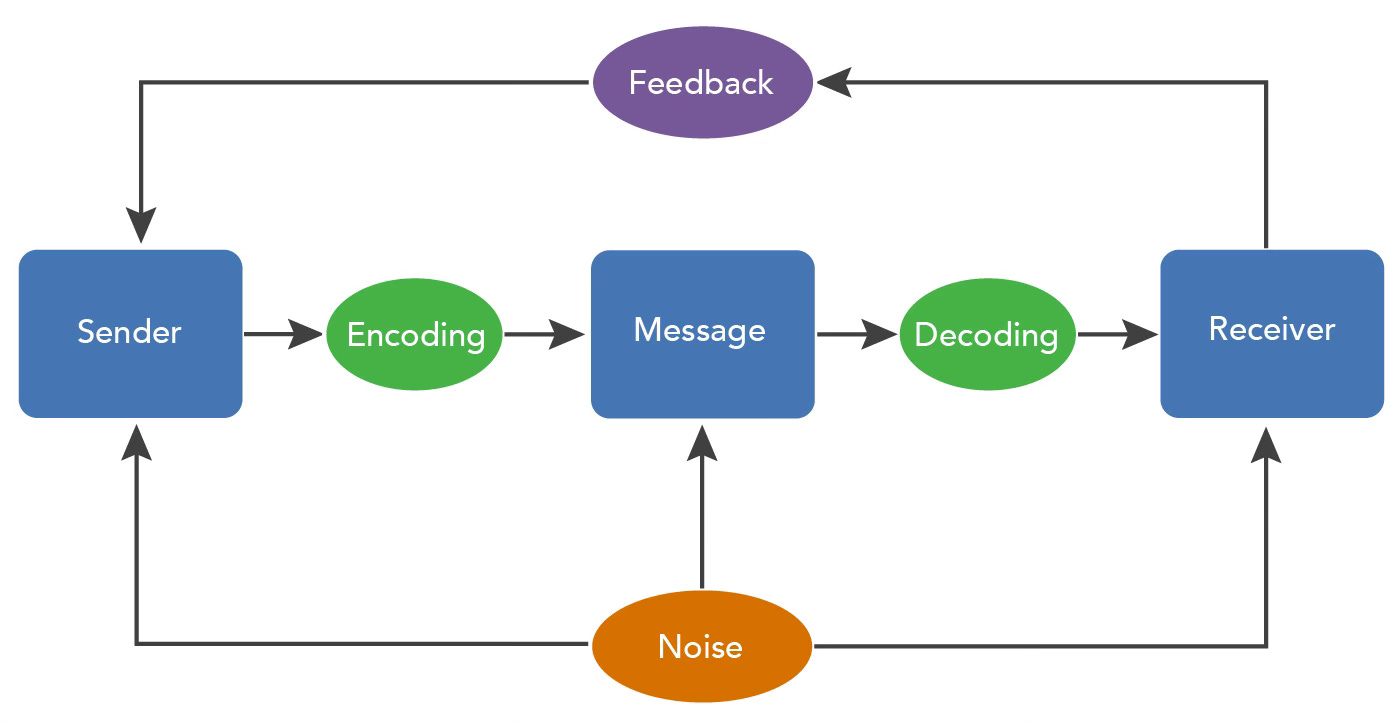

That thought reminded me of this image of the communication process that I saw in my communications class – back when Reagan was president.

The simplicity of the diagram belies the many ways communications can fall apart, from creating the wrong message in the wrong way, to sending it to the wrong person, using the wrong medium, and not receiving any feedback - verbal or nonverbal - in return. And the push toward text communications, and their reductive nature, are not helping.

So what’s the point of all this? To ban text and technology? No, not at all.

But organizations do need to cultivate strong interpersonal communication skills. Imprecise communication lengthens sales cycles, muddles customer expectations, and slows down understanding. In medicine and the military, it might be a matter of life or death.

And as pithy as we are with humans, we are downright loquacious with computers. Can interfacing with computers help us with our human-to-human communications?

For about a decade we have had Siri (2010) and Alexa (2014) in our lives. They understand simple tasks - “remind me” or “play this” or “turn the thermostat up.” And weirdly, most people are pretty polite, addressing their voice assistants in full sentences: “Siri, please turn on the flashlight.” But other than confirming our needs, they don’t converse. That all changed with Generative AI (GenAI).

If you are new to GenAI, it is a form of artificial intelligence that learns patterns in text, computer code, graphic images, sound files, whatever, and can use those patterns to generate new outputs. For text, it relies on large language models (LLMs). One of the most popular LLMs is GPT, which stands for generative pre-trained transformer. That is a mouthful of words to say it was trained on massive amounts of unlabelled data to see (and regurgitate) patterns. For example, if you hear “Twinkle, Twinkle,” the conventional pattern is to follow it with “Little Star.” GPT works in much the same way, filling in the words most likely to come next in a pattern. And it does so in the form of human-like conversation.
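To make the “Twinkle, Twinkle” idea concrete, here is a toy sketch in Python. It is not how GPT is actually built (real models use a neural network trained on vast amounts of text, not a lookup table), and the names `train` and `complete` and the three-line corpus are invented for illustration. It only shows the basic notion of continuing a pattern with the words that most often followed it before.

```python
from collections import Counter, defaultdict

def train(lines):
    """Count which word follows each two-word context in the sample text."""
    follows = defaultdict(Counter)
    for line in lines:
        words = line.lower().replace(",", "").split()
        for i in range(len(words) - 2):
            context = (words[i], words[i + 1])
            follows[context][words[i + 2]] += 1
    return follows

def complete(follows, prompt, max_words=2):
    """Extend the prompt by repeatedly adding the most common next word."""
    words = prompt.lower().replace(",", "").split()
    for _ in range(max_words):
        context = tuple(words[-2:])
        if context not in follows:
            break  # pattern never seen, so stop rather than guess
        words.append(follows[context].most_common(1)[0][0])
    return " ".join(words)

# Hypothetical, tiny "training data" for illustration only.
corpus = [
    "Twinkle, twinkle, little star",
    "Twinkle, twinkle, little star",
    "How I wonder what you are",
]
model = train(corpus)
print(complete(model, "Twinkle, Twinkle"))  # prints: twinkle twinkle little star
```

The toy version can only repeat what it has seen word for word. The leap with an LLM is that it generalizes from patterns well enough to respond to prompts it has never encountered.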

It is a marvel to watch the words flow down the page in response to a question. And the hype has been astounding. Since GenAI emerged, it has been heralded for increasing productivity - while derided for killing jobs and hallucinating. The workforce landscape will definitely be impacted, but it will take time to figure out in what way.

But what could happen in the short term is that the way we interact with GenAI may (ironically) teach a new generation how to communicate more effectively.

My last company had a generative AI component to its solution, so I started playing around with GenAI in early 2023. For me, GenAI is most notable when compared to a traditional search bar - where you type in a couple words to get a couple zillion responses. (Yes, you can use Boolean to narrow the search results, but Boolean’s arcane commands are on par with the acronyms and idioms of text.)

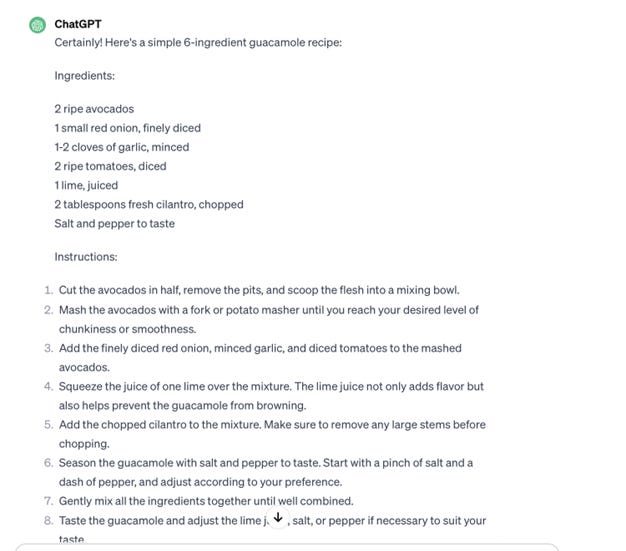

GenAI responds to human-like communications (at least human-like communications of yore). Instead of typing “guacamole recipe” into Google and getting 114 million or so pages to wade through (and closing out pop-up ads), I typed this into the prompt window of OpenAI’s ChatGPT:

Give me a Guacamole Recipe

and in seconds it wrote out a recipe.

No more wading! Just the recipe. If I didn’t have certain ingredients, or I wanted an Asian fusion recipe that features fish, and no soy sauce, I could have added all that into my prompt.

Verbosity Over Brevity

As I mentioned, when I first started playing with ChatGPT, I treated it more like a search bar - with just a few words. I was editing an article from a data scientist on Data Normalization. So I started with the prompt “Data Normalization.” I got back a response about “First Normal blah blah blah” (my words, not ChatGPT’s) that made my teeth hurt. I looked at the premise of the article, which was about what business managers needed to know about data normalization - using an example around kilograms and pounds. I tried again.

Explain data normalization to a business user and why they should care - use kg vs pounds as an example

Now I got what I was looking for. The more context I gave my prompt - such as the audience and the metaphor to be used - the better the response. Plus, I appreciated that after generating an answer, it asked, “is this a helpful conversation so far?”
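For the curious, here is one reading of the kg-versus-pounds example as a small Python sketch. The article I was editing is not reproduced here, so this is an assumption about what that kind of normalization looks like: weights recorded in mixed units are converted to a single unit before anyone compares them. The function name `to_kg`, the sites, and the numbers are all made up for illustration.

```python
LB_PER_KG = 2.20462  # approximate number of pounds in one kilogram

def to_kg(value, unit):
    """Convert a weight to kilograms so every record uses the same unit."""
    unit = unit.strip().lower()
    if unit in ("kg", "kilogram", "kilograms"):
        return value
    if unit in ("lb", "lbs", "pound", "pounds"):
        return value / LB_PER_KG
    raise ValueError(f"unknown unit: {unit!r}")

# Hypothetical records: two sites reporting shipment weight in different units.
shipments = [("Boston", 220.0, "lb"), ("Berlin", 100.0, "kg")]

normalized = [(site, round(to_kg(weight, unit), 1)) for site, weight, unit in shipments]
print(normalized)  # prints: [('Boston', 99.8), ('Berlin', 100.0)]
```

Before normalizing, 220 looks more than twice as large as 100. Afterward, the two shipments turn out to be nearly identical, which is why a business user should care.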

I got to thinking, could GenAI be a bridge to better communication? At the very least, could it help improve communications for those who bother to read this post?

At a meeting with some peers, someone related that in a recent employment offer letter, “Prompts” had been added to the list of things one must never divulge - whether the prompt was authored by the individual or the organization. Prompts were becoming a big deal! Shortly after, I saw a post shared on LinkedIn for a free Prompt Engineering course being offered by Vanderbilt University professor Jules White. I took the course and enjoyed his style. While he is a computer scientist, there is a storyteller within him. I sent him a note to find out his thoughts about the role prompts might play in communications.

It was Valentine’s Day when Jules White and I finally had a chance to speak - over the phone, because Teams wouldn’t work.

Since his background was cybersecurity, I needed to know why White was so interested in prompts. His dad was a professor of creative writing. “He’s in his 80s,” he shared. “When GenAI first came out we spent hours on the phone saying ‘oh, ask it this.’ ‘Oh follow up with that.’ We were having fun, fascinating conversations. I learned by spending time just conversing with it and sort of seeing the boundaries of where it can take the conversation.”

“You just sit there and have a conversation,” said White - likening it to the Louis Malle movie “My Dinner with Andre,” where two actors in a restaurant just converse. “Even though I come out of computer science, I was so blown away by every response. I didn’t care what it said. To me, it’s just the fact that it’s responding at all and even in a remotely sensical way.”

Then of course, the professor in him took over and he needed to find out what the boundaries were. “Could it create a funny scene between Faulkner and Queen Elizabeth?”

Ohhh, I had to try that. So I prompted ChatGPT to conjure up a funny scene between the two about colonialism. (What can I say? I was curious!) And it wasn’t horrible, although it first tried to tell me that Faulkner was from South Africa rather than Mississippi.

Faulkner: Your Majesty, I must say, discussing colonialism with you feels a bit like a Southern gentleman waltzing through a minefield of etiquette.

Queen Elizabeth II: (Chuckles) Oh, Mr. Faulkner, I assure you, I've had my fair share of delicate conversations over the years. But discussing colonialism with an esteemed author such as yourself adds a unique twist to the dance.

Faulkner: Well, ma'am, if colonialism were a dance, it would certainly be a clumsy two-step, with one partner often stepping on the other's toes.

Queen Elizabeth II: (Laughing) Quite the vivid imagery, Mr. Faulkner! But indeed, colonialism has left its mark in rather...ungraceful ways, shall we say.

It was precisely the type of interplay between White and the computer that he found enjoyable. He would write something and refine it as necessary to get what he was looking for. Which is what is so ironic for me. The tremendous feedback we get from generative AI is what is often lacking in interpersonal communication. White agreed and noted that what is called computer science will fundamentally change. It won’t be about coding but about communicating.

“You will have to be someone who understands how to communicate effectively with each model and express ideas and problems.”

Jensen Huang, CEO of Nvidia, one of the largest makers of the chips that allow the fast processing of generative AI, nearly set LinkedIn ablaze with this statement: “Nobody should have to program. The programming language should be human.”

Does everyone have this capability to express ideas and problems? I asked, fully expecting him to say no, absolutely not. While he admitted that his sample was small, the feedback he has gotten thus far has been positive, if not gushing: “Thank you, this just completely transformed what I do at work. I didn't realize you could use it in these ways. It kind of opened my eyes.”

“My initial response would be that people are absolutely getting it - and really quickly off to the races in ways that maybe I hadn't even initially anticipated.”

As examples, he shared that an investment banker has been refining her prompts to break human confirmation bias by asking, “Show me other perspectives.” A lawyer in Australia is teaching lawyers to be more empathetic by building client personas: “They have a small business, they've just had fraud by somebody who was a former employee, and it's created this huge financial problem for them. What are the emotions this person is feeling? Think about how it's affecting their life.”

“That's mind blowing,” he said. “A person is using the large language model to help better understand the perspective and the emotions of the person.”

So not only better interpersonal communications, but perhaps a computer can assist us to be more empathetic too?

Who knows, maybe we will speak unto each other as we do with computers.

[1] “ngl thatz sus” is shorthand for “not gonna lie, that’s suspicious (or suspect).”

I enjoyed reading this article. I shared it with my teens who thought it was pretty funny that mom's way of writing-with capitals, punctuation, and 'full words' (which to them means someone is upset and being passive aggressive) is how AI understands humans.

Thank you @FierceandFree The more elaborate prompts are essentially essays! I wonder if that isn't how we ought to be teaching AI.